Note: this is the second post of a two-part series about the prototype of Marti. This post presents an analysis of the program’s modules and a roadmap for future improvements for the project, while the previous post focuses on the present state of Marti.

By taking the time to first build out a prototype of Marti and then methodically analyze its different components, I was able to come to data-informed conclusions about which modules to prioritize and improve. The analysis and roadmap that I present below focus on the key refinement priorities: latency, fluency, and intelligence. After analyzing the performance of each of Marti’s modules according to these three priorities, I’ll share what I plan to do from here to improve each of the modules.

Hear

To learn about what’s in the current version of the “hear” module, check out the corresponding section in the Marti v0: Present post.

Analysis

Examining the prototype “hear” module through the lenses of latency and fluency, it’s clear that there are opportunities for improvement. Despite functioning well enough for this prototype, the system is both too slow and not good enough at understanding speakers.

Latency

The easiest aspect of the module to evaluate is latency. I divided the module into two components to measure their associated latencies: a “listening” component (where the program is recording the user’s speech) and a “recognizing” component (where the program sends the recording to the Google Speech Recognition API). For now, these two parts of the “hear” module need to run sequentially, though I’ve seen that some transcription APIs can run in streaming mode, meaning that they start to transcribe the user’s speech before the user has even finished talking.

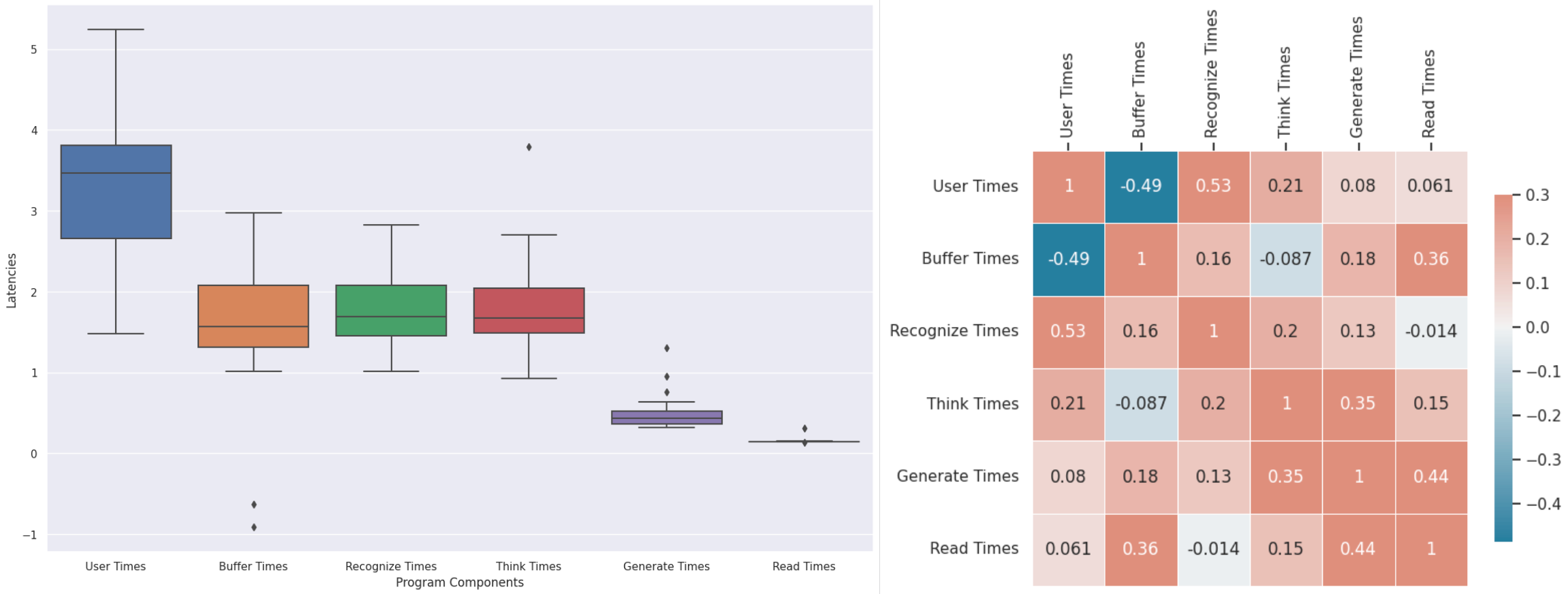

My analysis showed that a major source of latency in the “hear” module was actually a function of the user interface. The plot above shows three components of latency in the “hear” module: “User Times” (the amount of time that I spent speaking into the microphone), “Buffer Times” (the amount of time that the computer spent waiting to see if I’d say more after I’d finished speaking), and “Recognize Times” (the amount of time that it took for the program to send the audio to Google and get a response).

We can ignore “User Times” as a source of latency since users do not expect a program to start responding before they’ve finished talking. However, we can see that buffer times were, on average, about as long as recognition times. This means that the program spent as much time waiting for me to finish talking (even though I’d already finished) as it did figuring out what I had said.
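To make the three components concrete, here is a minimal sketch of how one turn could be split up from four timestamps. The `HearTimestamps` class, its field names, and the example numbers are all hypothetical; they are not taken from the prototype's code or measurements.

```python
from dataclasses import dataclass


@dataclass
class HearTimestamps:
    """Hypothetical timestamps (in seconds) captured during one turn."""
    speech_start: float      # user starts talking
    speech_end: float        # user stops talking
    recording_end: float     # program decides the user is done and stops recording
    transcript_ready: float  # transcription response arrives


def latency_breakdown(t: HearTimestamps) -> dict:
    """Split one turn of the "hear" module into its three components."""
    return {
        "user": t.speech_end - t.speech_start,
        "buffer": t.recording_end - t.speech_end,
        "recognize": t.transcript_ready - t.recording_end,
    }


def perceived_latency(t: HearTimestamps) -> float:
    """Time the user actually waits: everything after they stop talking."""
    parts = latency_breakdown(t)
    return parts["buffer"] + parts["recognize"]


# Illustrative numbers only, not measurements from the prototype.
turn = HearTimestamps(speech_start=0.0, speech_end=3.0,
                      recording_end=4.0, transcript_ready=5.0)
print(latency_breakdown(turn))
print(perceived_latency(turn))
```

Framing it this way makes it clear why "user" time drops out: only the buffer and recognize components are felt as waiting.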

In the “Where to go from here” section below, I’ll discuss how we can potentially decrease the latency from both of these factors.

Fluency

Although harder to quantify and measure than latency, the fluency of the module was also distinctly lacking. When I spoke clearly and slowly in simple Spanish, the text that appeared on the screen matched what I was saying with a near-zero word error rate.

However, as soon as I started speaking faster, less distinctly (which is common in many dialects of Spanish), or using even intermediate-complexity words, the system would start misapprehending what I said. Less commonly used words were incredibly perplexing to the module, which would assume that I was speaking some mix of English and Spanish when it couldn’t recognize the Spanish words I used.

Especially for beginner speakers, pronunciation can be challenging. For a system designed to teach a language, recognizing a mispronunciation and the intention of a student isn’t just a requirement, it’s an opportunity to help the student improve in a key area of learning a language.

Where to go from here

Having recognized the shortcomings of the solution that I’ve come up with, the plan from here is to experiment with different options for speech-to-text that will optimize for both latency and fluency. There are four areas of exploration that I will pursue to see if I can improve the hearing module:

Testing on-device speech recognition models to improve fluency and recognition latency

Trying alternative managed services to improve fluency

Using a “streaming mode” to decrease recognition latency

Designing a better UI to decrease “buffer” latency

On-device speech recognition

One potential way to improve latency is to use on-device speech recognition capabilities, like OpenAI’s Whisper. This would decrease network latencies and prevent getting caught in a queue behind other requests. However, there are disadvantages to running more software on the device, such as memory and CPU constraints. These on-device models may also be less capable than models accessible as managed services, so this may end up making the fluency even worse.

Speech recognition managed services

Experimenting with different managed services is a good way to test out different fluencies. Managed services focusing specifically on multilingual/polyglot models or on models trained specifically to the language that the learner wants may also be a good option. While these sometimes carry associated costs, they are often inexpensive, with many costing pennies to single-digit dollars per hour of transcription. It’s unclear whether other services will have lower or higher latencies, but it’s worth experimenting with them to see.

Using a “streaming mode”

Some services (and potentially self-hosted models) allow developers to stream audio data into the transcription service as it’s being generated. Right now, the program runs completely sequentially: first, the audio gets recorded, then it gets transcribed. This streaming mode could accelerate processing by moving to an asynchronous system, where recording audio and processing recorded audio happen in parallel.
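As a rough sketch of that asynchronous shape, the snippet below hands audio chunks to a worker thread while the "recording" loop keeps going. It uses only the standard library, and `fake_transcribe` is a hypothetical stand-in for whatever streaming recognizer ends up being used; no real speech API is called.

```python
import queue
import threading


def fake_transcribe(chunk: str) -> str:
    """Hypothetical stand-in for a real streaming recognizer."""
    return chunk.upper()


def stream_transcribe(audio_chunks):
    """Transcribe chunks on a worker thread while "recording" continues."""
    pending = queue.Queue()
    results = []

    def worker():
        while True:
            chunk = pending.get()
            if chunk is None:  # sentinel: recording has finished
                break
            results.append(fake_transcribe(chunk))

    thread = threading.Thread(target=worker)
    thread.start()
    for chunk in audio_chunks:  # in a real program, chunks come from the microphone
        pending.put(chunk)      # hand the chunk over without waiting for its transcript
    pending.put(None)
    thread.join()
    return " ".join(results)


print(stream_transcribe(["hola", "me llamo", "marti"]))
```

With this structure, the only transcription work left once the user stops talking is whatever remains in the queue, rather than the whole recording.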

As we can see in the correlation matrix above, there is a moderately strong positive correlation (0.53) between “User Times” (the amount of time that a user spends talking to the computer) and “Recognize Times” (the amount of time that the program needs in order to convert audio into text). Moving to a streaming mode would be especially helpful for decreasing latency when a user talks for a longer time, as those longer recordings also tend to take longer to process.
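For reference, a correlation like the one quoted here can be computed by hand. This sketch assumes the matrix reports Pearson coefficients (the usual default, but an assumption in this case), and the two lists are made-up values used only to show the call.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length, at least 2 items")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Made-up values for illustration, not the prototype's measurements.
user_times = [1.0, 2.0, 3.0, 4.0]
recognize_times = [0.9, 1.1, 1.6, 1.4]
print(round(pearson(user_times, recognize_times), 2))
```

A coefficient of 1.0 would mean recognition time rises in lockstep with speaking time; values nearer 0.5 indicate a real but noisier relationship.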

A better UI

Given that around half of the latency in the “hear” module comes from the buffer time, where the program is waiting for the user to finish talking, another fruitful avenue for decreasing latency may be finding a better interface for users. This buffer time is useful in making sure that the user is done talking before the program stops listening, but there may be ways to avoid this cost without sacrificing the program’s patience. In a future post on other solutions, I’ll highlight I Am Sophie as an example of a user interface that does a good job of minimizing this latency.
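To see where the buffer cost comes from, here is a minimal sketch of silence-based end-of-utterance detection. It is an assumption about how a recorder of this kind typically decides the user is done, not a description of the prototype's code; the function name, the energy values, and the parameters are all hypothetical.

```python
def end_of_utterance(frame_energies, threshold, pause_frames):
    """Return the index of the frame at which recording stops, or None.

    Recording stops once `pause_frames` consecutive frames fall below
    `threshold` after at least one frame of speech has been heard.
    """
    heard_speech = False
    quiet_run = 0
    for i, energy in enumerate(frame_energies):
        if energy >= threshold:
            heard_speech = True
            quiet_run = 0  # any speech resets the silence counter
        elif heard_speech:
            quiet_run += 1
            if quiet_run >= pause_frames:
                return i
    return None


# Illustrative energies: silence, three frames of speech, then silence.
energies = [0, 0, 5, 6, 5, 0, 0, 0, 0]
print(end_of_utterance(energies, threshold=1, pause_frames=3))
print(end_of_utterance(energies, threshold=1, pause_frames=1))
```

In a scheme like this, the buffer time is fixed at `pause_frames` multiplied by the frame duration on every turn, so shortening the pause trades patience for speed. An explicit "done talking" control in the UI would be one way to sidestep that tradeoff.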

Think

To learn about what’s in the current version of the “think” module, check out the corresponding section in the Marti v0: Present post.

Analysis

The “think” module can be improved by thinking both about the latency and the intelligence of the responses. In the world of machine learning, there’s often a speed-accuracy tradeoff (holding hardware fixed) because larger models both require more computation and produce better-fitted results. However, there’s no reason to think that the current solution I have is Pareto efficient, and it’s likely that there’s an opportunity for a Pareto improvement, i.e. one that improves either intelligence or latency without harming the other, or that improves both simultaneously.
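The Pareto idea can be made concrete with a small sketch. Each candidate is a (latency in seconds, quality score) pair, where lower latency and higher quality are better; the option names and numbers below are invented for illustration and say nothing about any real model.

```python
def dominates(a, b):
    """True if option `a` is a Pareto improvement over option `b`.

    Each option is a (latency_seconds, quality_score) pair: lower latency
    is better, higher quality is better.
    """
    latency_a, quality_a = a
    latency_b, quality_b = b
    no_worse = latency_a <= latency_b and quality_a >= quality_b
    strictly_better = latency_a < latency_b or quality_a > quality_b
    return no_worse and strictly_better


def pareto_front(options):
    """Keep only the options that no other option dominates."""
    return {
        name: point
        for name, point in options.items()
        if not any(dominates(other, point) for other in options.values())
    }


# Invented candidates, purely for illustration.
options = {
    "current": (2.0, 0.6),
    "faster": (1.0, 0.6),
    "smarter": (2.5, 0.8),
    "worse": (3.0, 0.5),
}
print(sorted(pareto_front(options)))
```

In this toy example "faster" is a Pareto improvement over "current", while "smarter" is a genuine tradeoff: better answers at the cost of more waiting.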

Latency

Unfortunately, because I’ve only tested an external service for the “thinking” module, it’s impossible for me to do a breakdown of the latency for this module. Latency could be caused by network latency (the time it takes to send the request to OpenAI’s servers and for the response to come back), queueing behind other requests by other users, or simply the time that it takes for the algorithm to process the input and generate an output.

What I found was that the “thinking time” that the entire module took was generally between 1 and 2.5 seconds. While this is by no means as fast as we’d like it to be, and there are likely opportunities for improvement, this is far from the biggest drag on the program’s overall performance. And given how complicated the task of generating output is, it’s actually surprising that it takes this little time for OpenAI to return a result.

Intelligence

Given that I did almost no prompt engineering and used the first LLM I could think of, the results were surprisingly intelligent. Although the system both incorrectly attempts to correct some non-errors in my Spanish and fails to catch some errors that I intentionally introduced, overall it was able to correct many simple mistakes and provided a good conversational partner.

Given the challenges discussed above in the Fluency section for the hear module, it wasn’t possible to perfectly evaluate the intelligence of the think module. Improving the hear module is a prerequisite for being able to see how the think module responds to more complicated words and phrases.

The fact that the system is memoryless also contributed to my perception that the think module has insufficient intelligence. This memorylessness occurred because I was passing a new request to GPT-3 each time I spoke to my computer, without any reference to the prior conversation. This meant that we could easily get caught in conversational loops and more broadly that the instructor wouldn’t be able to grow with me as a student.

Where to go from here

Since the latency of the think module is acceptable, the directions to explore here all aim at improving the intelligence of the system:

Prompt engineering for the current model

Changing the model to a different managed service offering (e.g. Cohere)

Changing the model to an open-source model (e.g. BLOOM on Hugging Face)

Experimenting with options for adding memory

Prompt engineering

Of the ways to improve the intelligence of the LLM, prompt engineering is the lowest-hanging fruit and I’ll likely need to do it regardless of the model that I end up selecting. Although LLMs have shockingly good out-of-the-box performance, for a highly-specialized task like language instruction, they’ll need some guidance on how to respond appropriately.
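As an illustration of the kind of guidance meant here, the sketch below wraps the student's utterance in instructions before it is sent to a model. The function name and the prompt wording are hypothetical starting points, not the prompt the prototype uses, and no model is called.

```python
def build_instructor_prompt(student_text, language="Spanish", level="beginner"):
    """Wrap a student's utterance in instructions for the LLM (wording is a guess)."""
    return (
        f"You are a patient {language} instructor talking with a {level} student.\n"
        f"Reply only in {language}, using vocabulary suited to a {level} learner.\n"
        "If the student's message contains a mistake, give the corrected sentence "
        "first and then continue the conversation. If there is no mistake, do not "
        "invent one.\n\n"
        f"Student: {student_text}\n"
        "Instructor:"
    )


print(build_instructor_prompt("Yo soy hambre"))
```

The "do not invent one" line targets the false corrections described in the Intelligence section; whether it actually reduces them would need testing.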

Managed services

Testing different managed service offerings for LLMs is also pretty straightforward, though it likely won’t result in significant improvements compared to OpenAI’s GPT-3. OpenAI is on the bleeding edge of LLM development and GPT-3 is their state-of-the-art model, so even if another provider’s solution performs better for our particular use case, the gain is unlikely to be large. Still, there may be a model that’s better suited for the particular language instruction use case that we have.

Open-source models

Testing open-sourced models would likely take the most work but also potentially be the most fruitful. By having direct access to the models, I could experiment with reward learning and other techniques to fine-tune the performance of models for our purpose.

While the other two options will likely have little-to-no impact on the latency of the thinking module, changing to an open-source model opens up the possibility of self-hosting the model instead of relying on a managed service for it. This could impact the latency in either direction, similar to self-hosting the speech recognition component. Fortunately, services like Hugging Face allow users to choose between self-managing a model or using a managed-service API for it.

Adding memory

The final possibility for improvement of the model comes with adding memory to it. Adding memory would allow the system to be more intelligent because the instructor could remember the conversation (as well as potentially past conversations), thus allowing it to avoid conversational loops or repeating topics. A really advanced AI could also use memory to identify the student’s weak areas and help them improve over time.
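The simplest form of memory is to resend the most recent turns with every request. Here is a minimal sketch of that idea; the `ConversationMemory` class, the speaker labels, and the turn limit are all hypothetical, and more capable approaches (summaries, entity stores) are not shown.

```python
from collections import deque


class ConversationMemory:
    """Keeps the most recent turns so each request can include them."""

    def __init__(self, max_turns=6):
        # A bounded deque drops the oldest turn automatically when full.
        self.turns = deque(maxlen=max_turns)

    def add(self, speaker, text):
        self.turns.append((speaker, text))

    def build_prompt(self, student_text):
        """Prepend the remembered turns to the student's new message."""
        history = [f"{speaker}: {text}" for speaker, text in self.turns]
        history.append(f"Student: {student_text}")
        history.append("Instructor:")
        return "\n".join(history)


memory = ConversationMemory(max_turns=4)
memory.add("Student", "Hola, me llamo Ana.")
memory.add("Instructor", "Hola Ana, ¿cómo estás?")
print(memory.build_prompt("Estoy bien, gracias."))
```

Capping the number of turns keeps the prompt within the model's input limit, at the cost of forgetting anything older than the window.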

From a brief exploration of the space, it looks like there’s a lot of work being done to improve model memory. It seems like good work is being done by LangChain, which is focused on turning LLMs into chatbots by chaining together multiple calls to the LLM and introducing memory. Additionally, a friend of a friend did some work on long-term memory for LLMs, extracting important information from the conversation and storing it in an entity store.

It looks like this topic is on the bleeding edge of the conversation around turning LLMs from toys into functional products, so I’m excited to dive deeper and understand what work has already been done here.

Speak

To learn about what’s in the current version of the “speak” module, check out the corresponding section in the Marti v0: Present post.

Analysis

The speak module performed the best in my analysis of the three modules, with very low latencies and good-enough fluency. Although I think this module could be improved by finding an option that sounds less robotic and more fluent when speaking Spanish (or other foreign languages), the current option works well enough that this is a lower priority.

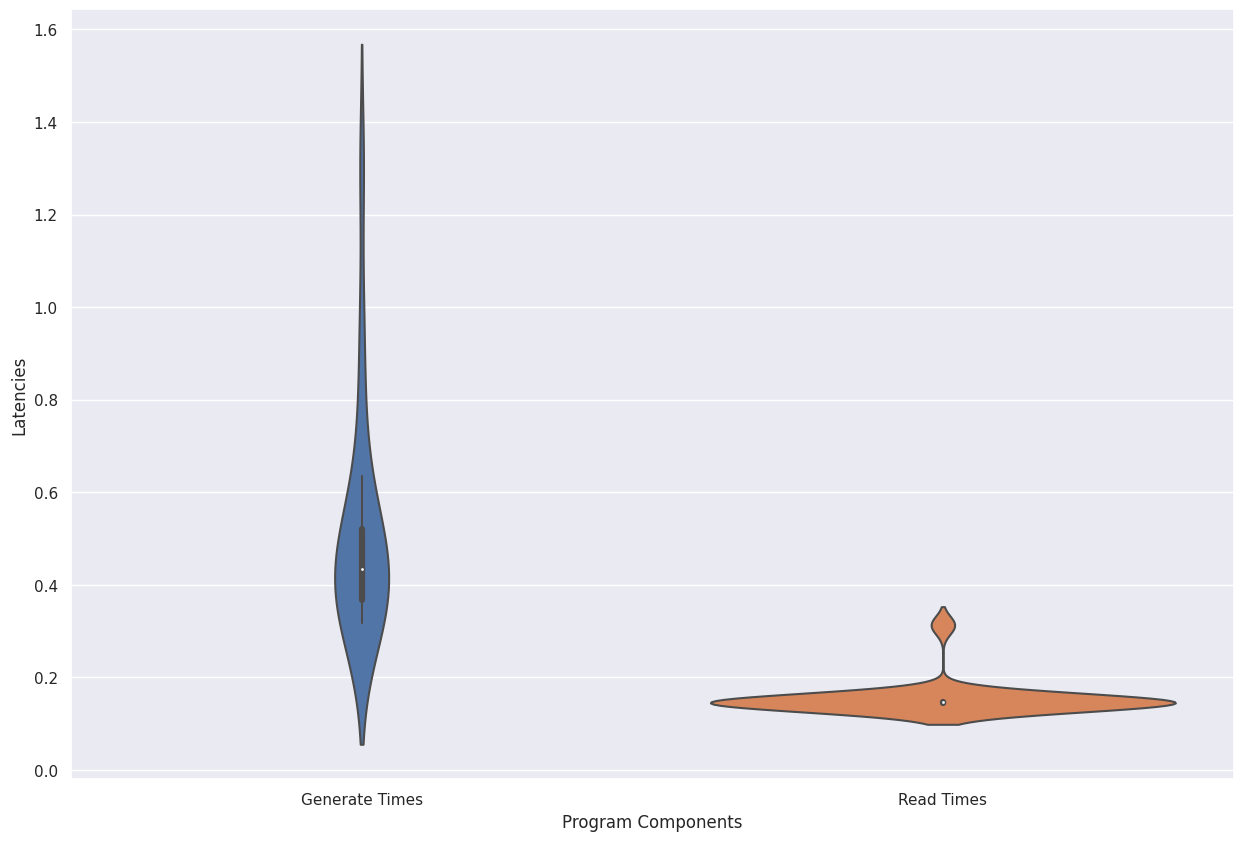

Latency

This module really shines when it comes to latency. It generally takes under a second to generate an audio file from text and then read that file into memory so that it can be played. Although there were some outliers where the time-to-generate was closer to 1.5 seconds, in the grand scheme of things, this module is barely contributing to the latency of the program overall.

When testing the module on longer pieces of text, I did find that there was a strong correlation between the length of the text and how long it took to generate the audio for it. For example, the introduction to Don Quixote (attached in the Fluency section) took almost 15 seconds to generate. This may become a problem later if the instructor starts producing long textual responses, but for now, it doesn’t seem to be an issue.

Fluency

As discussed above, the fluency of the program isn’t perfect, but it’s certainly not the worst part of the prototype. Because TTS has the option to set a language, there’s a dedicated Spanish-oriented voice, which can handle the sounds and pronunciation of the language well. Although there is still a certain robotic quality to the voice, this area of the program seems to be the best performing.

Where to go from here

Since this module already executes quickly and has decent fluency, it’s not a high priority for retooling. It’s worth exploring more managed services as well as on-device models for text-to-speech, but, for now, effort on this module will be on the back burner while I get the other two modules up to scratch.

Conclusion

Phew. If you’ve made it this far, I laud your ability to stay patient through my ramblings.

The idea for Marti AI is an exciting one and the work on it so far is promising. Although there is still a long way to go before Marti is genuinely capable of augmenting human language teachers, and although it’s possible that AI technology simply hasn’t evolved to the point where Marti can be effective as a teacher, the road ahead and paths for exploration are clear to me.
